Over the past decade, everyone has been chasing the new, shiny tool: Artificial Intelligence. After all, it does what humans have been chasing since we learned to use fire—ways to make our lives easier.

Despite its long list of pros, AI comes with a warning label that business leaders tend to ignore: ‘use with caution.’ This has business and industry experts debating the risks and rewards of AI, its potential and pitfalls, and how to win the tug-of-war between them.

Poking Schrödinger’s cat

When it comes to AI, there is more to the concerns than meets the eye. With all the buzz around it, we don’t blame business leaders for wanting to dip their feet in AI waters. But wading into uncharted waters without understanding the uncertainties is a risk in itself.

Think of it like Schrödinger’s cat. Until you implement AI and look inside the box, there’s no way to tell whether the risks exist. At any given time, it is simultaneously a risk and not a risk.

So what do you do? Not embrace AI while your competitors are? Of course not. Despite the uncertainties, operating out of fear is not a solution.

The right step is to know your enemy so you can eliminate it before it attacks you.

Cat’s out of the box: understanding the risks

Before we tell you how to win the tug-of-war with risks, let’s get to know the enemy first. We will keep this brief.

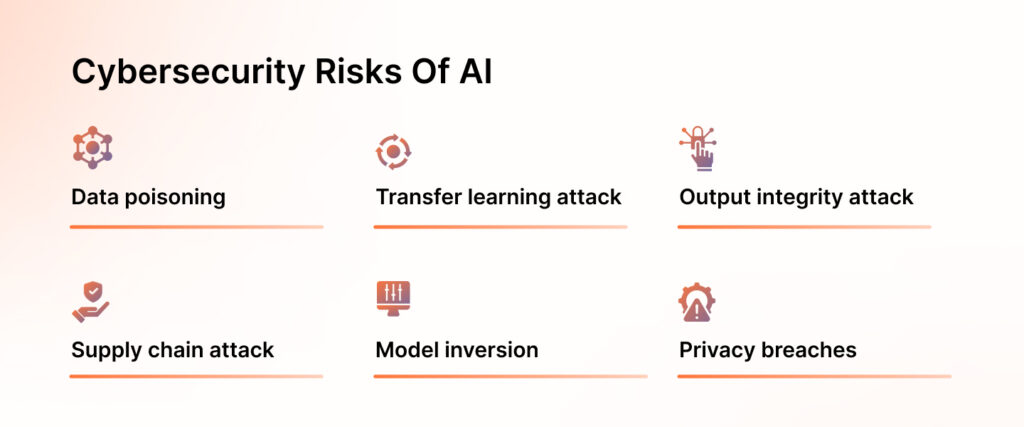

- Data poisoning: Also known as AI poisoning, this attack manipulates the data used to train AI models. It tricks the model into making incorrect predictions and can keep it from recognizing the attack.

- Transfer learning attack: Occurs when attackers tamper with a pre-trained model that is later used as the starting point for training a similar model. The tampering carries over, resulting in misclassifications and undesirable behavior in the second model.

- Output integrity attack: A deliberate attempt by an attacker to manipulate or modify the outcomes of machine learning models to compromise the results.

- Supply chain attack: This involves injecting malicious components into ML models or datasets during development, deployment, or updates to compromise their integrity. This can result in biased predictions, data leaks, or system vulnerabilities.

- Model inversion: A security threat in which attackers query a machine learning model and use its outputs to reconstruct the data it was trained on, exposing sensitive information.

- Privacy breaches: AI models can access sensitive private information for training purposes, which can inadvertently violate the privacy and security regulations that apply to your business.
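
To make one of these risks concrete, here is a minimal Python sketch of a guard against output integrity attacks: the service that produces a prediction attaches a keyed signature (HMAC), and any downstream consumer rejects results whose signature no longer matches. The function names, the payload fields, and the demo key are all hypothetical and only for illustration. A real deployment would load the key from a secrets manager and would need to fit this into its own serving pipeline.

```python
import hashlib
import hmac
import json


def _canonical(payload: dict) -> bytes:
    """Serialize the payload deterministically so key order does not matter."""
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_prediction(payload: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag for a model output (hypothetical helper)."""
    return hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()


def verify_prediction(payload: dict, signature: str, key: bytes) -> bool:
    """Check the tag in constant time; False means the output was altered."""
    expected = sign_prediction(payload, key)
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    # Assumption: a demo key. In practice, load it from a secrets manager.
    key = b"demo-key-do-not-use-in-production"

    # Assumption: an illustrative payload shape, not a real API response.
    prediction = {"model": "fraud-scorer-v1", "input_id": "txn-001", "label": "legitimate"}
    tag = sign_prediction(prediction, key)

    # The untouched prediction verifies.
    print(verify_prediction(prediction, tag, key))

    # A prediction modified in transit does not.
    tampered = dict(prediction, label="fraud")
    print(verify_prediction(tampered, tag, key))
```

This only detects tampering after the model has produced its output. It does nothing against poisoned training data or a compromised model, which need their own controls.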

Raining cats and compliances

No comprehensive federal legislation exists in the United States to tame AI’s largely unregulated landscape. California’s AI Safety Bill (SB 1047) came close to becoming law, but it was a state bill, not a federal one, and was vetoed in September 2024.

This, however, did not stop AI frameworks from popping up. With AI taking over everything, many regulations have also emerged in recent years to address the growing ethical and privacy concerns. It is raining cats and compliances. Some of these are:

- Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. This executive order, issued by the White House, focuses on federal agencies and developers using powerful AI systems.

- NIST AI RMF is a voluntary framework developed to help businesses design, develop, and adopt AI products in a trustworthy manner.

- ISO 42001, an international standard, specifies requirements for establishing, implementing, maintaining, and improving Artificial Intelligence Management Systems (AIMS) within an organization.

- The Artificial Intelligence Act, a European Union regulation that entered into force in August 2024, calls for stringent governance and risk management.

Embracing standards and frameworks like the ones above helps you reap the benefits of AI while minimizing the risks.

How to train your cat 101: building a culture of security

While AI is smart enough to handle intellectual tasks, it is also dumb enough to introduce risks to your system. In security, prevention is better than cure. As a CISO, here are some preventive measures to consider:

- Transparency: Transparency is critical if you are working with third-party developers or service providers. Unless you have a full picture of the vulnerabilities and risks they can introduce to your environment, we recommend you avoid them. Before onboarding new partners, communicate and collaborate with them to share your concerns.

- Human oversight: As we stated above, AI can be smart and not so smart at the same time. AI systems operating with zero human intervention are a recipe for disaster. The right way to approach AI is with the assumption that something can go wrong. Scrutinize it for issues like bias, check for AI hallucinations, and verify the data being fed into it.

- Continuous risk monitoring: Better safe than sorry. Continuously monitor AI systems for security vulnerabilities. We recommend using an automated risk monitoring tool like Sprinto.

- AI model watermarking: Involves using watermarking and fingerprinting techniques to identify AI models. This helps to identify instances of model theft and track unauthorized modifications.
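
As a rough illustration of the fingerprinting idea, the Python sketch below records a SHA-256 hash of a model file and later checks whether the file still matches it. This is the simplest file-level form of fingerprinting, useful for spotting unauthorized modifications. It is not watermarking, which embeds an identifying signal in the model itself. The function names and the stand-in model file are hypothetical.

```python
import hashlib
import hmac
import os
import tempfile


def fingerprint_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks to limit memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_unmodified(path: str, expected_fingerprint: str) -> bool:
    """Compare the file's current fingerprint with the one recorded at release time."""
    return hmac.compare_digest(fingerprint_file(path), expected_fingerprint.lower())


if __name__ == "__main__":
    # Assumption: a temporary file stands in for a real model artifact.
    with tempfile.TemporaryDirectory() as tmp:
        model_path = os.path.join(tmp, "model.bin")
        with open(model_path, "wb") as f:
            f.write(b"pretend these bytes are model weights")

        # Record the fingerprint when the model is approved for release.
        recorded = fingerprint_file(model_path)
        print(is_unmodified(model_path, recorded))

        # Simulate an unauthorized modification of the artifact.
        with open(model_path, "ab") as f:
            f.write(b"extra bytes")
        print(is_unmodified(model_path, recorded))
```

The recorded fingerprint is only useful if it is stored somewhere an attacker cannot change along with the model, such as a signed release manifest.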

Here’s Kelly Hood, EVP & Cybersecurity Engineer, walking us through the strategies for balancing AI adoption with risk management.

Embrace AI, not the risks

AI governance is about building and managing AI systems with purpose and responsibility. If your organization operates under data privacy regulations or other frameworks, it is essential to ensure your AI aligns with these rules to minimize risks. This is where tools like Sprinto make a difference by automating much of the heavy lifting.

With Sprinto, you can streamline critical GRC tasks, such as conducting internal risk assessments, managing controls continuously, and staying aligned with privacy frameworks like GDPR or CCPA. The platform also monitors for potential data risks or privacy issues, sending automated alerts so you can act swiftly and proactively.

Don’t leave compliance to chance. See Sprinto in action and start building trust while simplifying your path to AI compliance.

Anwita

Anwita is a cybersecurity enthusiast and veteran blogger all rolled into one. Her love for everything cybersecurity started her journey into the world of compliance. With multiple cybersecurity certifications under her belt, she aims to simplify complex security-related topics for all audiences. She loves to read nonfiction, listen to progressive rock, and watch sitcoms on the weekends.
